2018 brought to the fore a range of changes relating to data. The significance of information within organizations was on the rise, and so were megatrends such as IoT, Big Data and Machine Learning. Cloud integration and governance, both significant data initiatives, reached a new high as well.

What big data has in store for 2019 is therefore a point of interest.

The top trends are likely to continue what was witnessed in 2018. We can also look forward to new developments involving even more data sources and types. The need for integration and cost optimization will increase, and organizations will use even more advanced analytics and insights.

Let us take a look at the top trends in big data analytics in 2019.

1. Internet of Things (IoT)

IoT was a booming technology in 2018. It has significant implications for data, and a number of organizations are working to tap its potential. The volume of data generated by IoT will reach a new high, and organizations are likely to continue having difficulty putting that data to use with their existing data warehouses.

The growth of digital twins is likely to run into similar issues. Digital twins are digital replicas of people, places or just about any kind of physical object. Some experts estimate that by 2020, the number of connected sensors will exceed 20 billion. To realize the value of this data, it will be essential to integrate it into a modern data platform. This will require a solution for automated data integration that can unify unstructured sources, deduplicate records and clean the data.
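The deduplication and data cleaning step can be pictured with a small sketch. The record layout (`device_id`, `timestamp`, `temp_c`) and both function names are assumptions made for illustration; they do not come from any particular integration product.

```python
from typing import Dict, Iterable, List


def clean_record(record: Dict) -> Dict:
    """Trim whitespace from string fields and lowercase the device id."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip()
        cleaned[key] = value
    cleaned["device_id"] = str(cleaned["device_id"]).lower()
    return cleaned


def deduplicate(records: Iterable[Dict]) -> List[Dict]:
    """Keep the first cleaned reading for each (device_id, timestamp) pair."""
    seen = set()
    unique = []
    for record in map(clean_record, records):
        key = (record["device_id"], record["timestamp"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical sensor readings; the first two differ only in formatting.
raw = [
    {"device_id": " Sensor-1 ", "timestamp": "2019-01-01T00:00:00", "temp_c": 21.5},
    {"device_id": "sensor-1", "timestamp": "2019-01-01T00:00:00", "temp_c": 21.5},
    {"device_id": "sensor-2", "timestamp": "2019-01-01T00:00:00", "temp_c": 19.0},
]

print(len(deduplicate(raw)))  # prints 2
```

Cleaning runs before the duplicate check on purpose: the two `sensor-1` readings only match once their ids have been normalized.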

2. Augmented Analytics

In 2018, a majority of qualitative insights were not taken into consideration by data scientists, even after they had analyzed large amounts of data.

But as the shift towards augmented analytics gains prominence, systems will use machine learning and AI to surface some insights in advance. Over time, this will become an important feature of data analytics, data management, data preparation and business process management. It may even give rise to interfaces in which users can query data using speech.

3. Use of Dark Data

Dark data is the information that organizations collect, process or store as a result of their everyday business activities, but are unable to use for any application. The data is collected largely for compliance purposes, and while it takes up a significant amount of storage, it is not monetized in any way to yield a competitive advantage for the firm.

In 2019, we are likely to see even more emphasis on dark data. This may include the digitization of analog records, such as old files and fossils in museums, followed by their integration into data warehouses.

4. Cost Optimization of the Cloud

Migrating a data warehouse to the cloud is less expensive than keeping it on-premises, but cloud costs can be optimized further still. In 2019, cold data storage solutions such as Google Nearline and Coldline will come into prominence. This will let organizations save 50% of the expense of storing the data.
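How much a cold tier saves depends on how much of the data can actually move to it. The arithmetic can be sketched as follows. The prices here are made-up placeholders, not real Google Cloud rates, and the sketch ignores retrieval and early-deletion fees that cold tiers typically charge.

```python
def monthly_storage_cost(hot_gb: float, cold_gb: float,
                         hot_price: float, cold_price: float) -> float:
    """Monthly bill for data split between a hot tier and a cold tier."""
    return hot_gb * hot_price + cold_gb * cold_price


# Hypothetical prices in dollars per GB per month (assumptions, not real rates).
HOT_PRICE = 0.02
COLD_PRICE = 0.01  # assumed to be half the hot price

# Hypothetical warehouse: 10,000 GB, of which 8,000 GB is rarely accessed.
all_hot = monthly_storage_cost(10_000, 0, HOT_PRICE, COLD_PRICE)
tiered = monthly_storage_cost(2_000, 8_000, HOT_PRICE, COLD_PRICE)

savings = 1 - tiered / all_hot
print(f"{savings:.0%}")  # prints 40%
```

Under these assumed prices, the full 50% is reached only if every gigabyte moves to the cold tier, so the realistic saving tracks the share of data that is genuinely cold.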

5. Edge Computing

Edge computing refers to processing information close to the sensors, using that proximity to the best advantage. It reduces network traffic and keeps system performance optimal. In 2019, edge computing will come to the fore and cloud computing will become more of a complementary model. Cloud services will go beyond centralized servers and become a part of on-premises servers as well. This augurs well for both cost optimization and server performance.
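One way to picture the reduction in network traffic is an edge device that summarizes raw readings locally and forwards only the summaries. This is a minimal sketch under that assumption; the function name, the window size and the sample readings are all hypothetical.

```python
from statistics import mean
from typing import Dict, List


def summarize_at_edge(readings: List[float], window: int) -> List[Dict]:
    """Collapse each window of raw readings into one summary record."""
    summaries = []
    for start in range(0, len(readings), window):
        chunk = readings[start:start + window]
        summaries.append({
            "count": len(chunk),
            "min": min(chunk),
            "max": max(chunk),
            "mean": mean(chunk),
        })
    return summaries


# Hypothetical temperature readings collected on the device.
raw_readings = [20.0, 20.5, 21.0, 21.5, 22.0, 22.5]

# Only the summaries would be sent upstream, not the raw readings.
payload = summarize_at_edge(raw_readings, window=3)
print(len(raw_readings), "readings ->", len(payload), "messages")
```

The trade-off is that the central platform no longer sees individual readings, so the window size has to match the level of detail the analytics actually need.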

Some experts believe that, with its decentralized approach, edge computing and analytics is a potential solution for data security as well. An important caveat, however, is that edge computing increases the number of potential access points for hackers. A majority of edge devices also lack IT security protocols, which makes an organization more vulnerable to hacking.

Advances in edge computing have increased the need for a flexible data warehouse that can integrate all data types in order to run analytics.

6. Data Storytelling

In 2019, with more and more organizations moving their traditional data warehouses to the cloud, data visualization and storytelling are likely to advance to the next level. As a unified approach to data comes to the fore, aided by cloud-based data integration platforms and tools, an even larger number of employees will be able to tell accurate and relevant stories based on the data.

As business integration tools improve and enable organizations to overcome issues related to data isolation, data storytelling will become reliable and be in a position to influence business outcomes.

7. DataOps

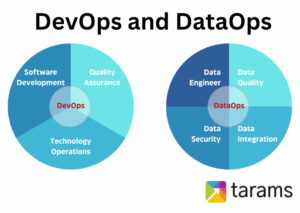

DataOps was a prominent trend in 2018 and is expected to gain even more importance in 2019. Its rise is in direct proportion to the growing complexity of data pipelines, which calls for even more tools for data integration and governance.

DataOps is characterized by the application of Agile and DevOps methods across the lifecycle of data analytics. The lifecycle starts with collection, followed by preparation and analysis. The outcomes are then tested automatically and delivered, enhancing the quality of data and data analytics.

DataOps is preferred because it facilitates collaboration around data and brings about continuous improvement. With statistical process control, the data pipeline is monitored to ensure consistent data quality.
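Statistical process control on a pipeline can be as simple as checking a metric against control limits derived from past runs. The sketch below assumes the monitored metric is the row count of each pipeline run and uses the common three-sigma rule. The function names and sample numbers are illustrative, not taken from any DataOps tool.

```python
from statistics import mean, stdev
from typing import List, Tuple


def control_limits(baseline: List[float], sigmas: float = 3.0) -> Tuple[float, float]:
    """Lower and upper control limits: mean +/- sigmas * sample std deviation."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - sigmas * spread, center + sigmas * spread


def out_of_control(values: List[float], limits: Tuple[float, float]) -> List[float]:
    """Return the values that fall outside the control limits."""
    lower, upper = limits
    return [v for v in values if v < lower or v > upper]


# Hypothetical row counts from past pipeline runs that were known to be healthy.
baseline_row_counts = [1000, 1010, 990, 1005, 995, 1000, 1008, 992]
limits = control_limits(baseline_row_counts)

# Hypothetical new runs; the second one lost a large share of its rows.
new_runs = [1003, 640, 998]
print(out_of_control(new_runs, limits))  # prints [640]
```

A flagged run would normally block delivery or raise an alert, so that a bad batch does not reach downstream reports.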

To leverage these trends to the fullest, a vast number of organizations are coming to realize that their traditional data warehouses need improvement. As a result of the larger number of endpoints and edge devices, the number of data types has increased as well. A flexible data platform therefore becomes imperative for efficiently integrating all data sources and types.