A study on implementation towards resolving IT tickets

Need for Sentiment Analysis

The Internet today is used widely to express opinions, reviews and comments among other things. These are primarily expressed on various topics such as current affairs, social causes, movies, products, friend’s pictures, etc.

All these opinions, reviews and comments implicitly express a sentiment that the author was feeling at the time of its expression. These sentiments range from happiness, positivity, support to anger, disdain and sadness.

Studying and analyzing these sentiments are necessary for certain individuals or groups, particularly individuals or groups about whom these opinions are being expressed. This involves going through all the comments, reviews, and opinions to gather the information to study and analyze. Physically sifting through all the messages, comments and reviews is a laborious process and there are tools available which eases the burden albeit inefficiently.

This in brief is one of the needs for Sentiment Analysis

IT Tickets

Tickets, in this context – IT Tickets, or ‘Support Requests’ are generated daily across the globe in any organization which has a customer support system in place. These are mainly to resolve the various glitches, errors or downtimes experienced when using devices that are digitally connected. The tickets or requests contain, depending on the service provider, simple fields to report a problem with minimal words and/or screengrabs/screenshots. The tickets or requests are then put through the customer support system, and they go through resolution based on the process dictated by the organization.

In any customer support system, these requests are sorted and analyzed and resolved based on the process in place. A quick turnaround in resolving the ‘support requests’ is important to retain the customer base, as a happy customer is more likely to stick to a service than an unhappy one.

Sentiment Analysis for IT Tickets

This study documents our efforts in implementing ‘Sentiment Analysis’ to sort IT tickets in any organisation to achieve faster turnaround time and quicker resolution

Our Approach

The Support requests or IT Tickets come with various requests ranging from simple to complex. They also carry a variety of emotions ranging from mild annoyance to severe discontent. A mechanism to address ‘priority’ requests is essential to ensure that customers who are very unhappy or distressed be attended prior to others. Ensuring this priority and resolving these issues is proportional to the retention of the customer base.

In analyzing the different solutions available currently, Sentiment Analysis by virtue of its approach, stood out for detecting these ‘priority’ IT tickets and was used to achieve the desired results. The process was carried out without any human interaction, hence the quick detection of ‘unhappy’ or ‘priority’ requests resulted in a quick turnaround time for resolving the tickets.

Sentiment Analysis

Sentiment Analysis deals with identifying the hidden sentiment (positive, negative or neutral emotion) of a comment, review or opinion. It is extensively used these days to understand how the general populace is feeling about a movie, a product or an event.

Identifying ‘Sentiments’

IT Ticket comments come with descriptions that are usually short and sometimes precise. The ‘objective descriptions’ of these comments seem inherently negative, but are neutral in IT Support context.

A typical example is – “The program is throwing up an error“. This statement does not necessarily emote any sentiment. The challenge lies in ignoring the objective parts of the comment and concentrate on the ‘sentiment’ part expressed in the ‘subjective part’ of the IT Ticket. A typical example for that is – “This is terrible and I am frustrated”.

Segregation based on the above theory entails a complete understanding of the product and service for which the IT tickets are being raised. This understanding enables us to identify the words, phrases and sentences that are being used to describe the ‘undesirable behavior’ or ‘malfunctioning’ of the product or service.

This, to a layman, appears very straightforward and simple, but in reality poses a serious challenge in distinguishing between the objective and subjective parts of the issue or comment.

Choosing the RIGHT approach

The approach that we chose had to be able to work on the type and amount of data we had. It also needed to be easily tunable in the future. There are two popular approaches to implement Sentiment Analysis,

Machine Learning Based

In this approach, we needed to generate a vector representation of each comment and train a model with this vector as the ‘feature vector’ and the ‘sentiment’ of the comment as the target. The trained model then would predict the sentiment polarity score of a new comment feature vector, which is then fed into the model.

Keyword Based

Here we looked for keywords and assigned sentiment scores to the text, based on the sentiment values of the keywords.

We did not use the machine learning based approach as we did not have access to ample number of comments for the training; we were able to access only around 9000 comments of which very few – below 50, were manually classified as negative.

We chose to go with the Keyword Based Approach.

Keyword Based Approach

In the keyword based approach, we used a NLTK based library which assigned sentiment polarity scores ranging from -1 (most negative) to +1 (most positive) for pieces of text.

We filtered comments to remove artefacts like personal details, URLs, email addresses, logs and other metadata since these do not have any sentiment value. The text of the filtered comment was then used in the scoring process. If any sentence had a non-alphabet content greater than 25%, then it was not taken into consideration while scoring. Such sentences usually do not contribute to the sentiment polarity of the text – due to the texts usually not being dictionary words. For example, the snippet of code: C = A + B

We took a granular approach when assigning sentiment scores to text as we specifically wanted to ignore parts of text which seemed inherently negative, but were neutral in the IT support context. The approach we used was to divide each sentence in a comment into ‘Trigrams’.

A trigram is a window with just three consecutive words. This window was slid over the words in the sentence to identify constituent trigrams. We manually went through a large collection of comments and came up with trigrams which should not be assigned a sentiment value, in the IT support context.

Any such trigrams which showed up in a sentence were ignored. The remaining trigrams were scored and we took into consideration only trigrams which had a sentiment score that was significantly different from Zero. Also, if a sentence had less than three words in it, then a sentiment value for that sentence was calculated directly and if that score was significantly different from zero, it was used for calculating sentiment score for the comment. We also manually came up with a list of such sentences that we should ignore.

In adjacent trigrams with overlapping tokens; if their scores hadn’t changed much and the score for the common part contributed the vast majority of the trigram score, then only the first trigram’s score was taken into consideration. For example, let us consider the sentence “it is frustrating to have to go over this again.” Here, the trigrams “it is frustrating”, “is frustrating to” and “frustrating to have” all have a sentiment score of -0.4 and the word ‘frustrating’ alone contributes to that score. So, we just took into consideration the score for the first trigram above and ignored the other two.

All trigrams which satisfied the conditions mentioned above were collected along with their scores. If there are no such trigrams, a sentiment score of zero was assigned to the comment. If there were any such trigrams, we then checked to see if there were one or more trigrams with a score less than or equal to a threshold value. If yes, then we took the mean value of the sentiment value of all trigrams with a sentiment score value less than or equal to the threshold and assigned this value as the sentiment score of the comment. This was done as part of an effort to aggressively go after negative comments.

Here, xi represents a sentiment score and the angle brackets denote mean value.

If there were no trigrams with a score of less than or equal to the threshold, then we took the weighted average of all the sentiment values of all the scored trigrams and assigned this value as the sentiment value for the comment. The weights were chosen in such a manner that for negative scores the weight was greater than 1 and increased as the score decreased and for positive scores the weight was less than 1 and decreased as the score increased

Here xi denotes the sentiment score for a trigram and wi denotes its weight

Results

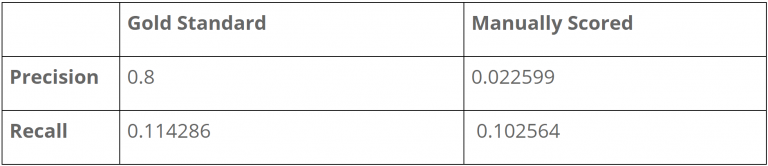

To evaluate the approach/algorithm, we needed to ensure that the comments were categorised correctly according to their sentiment. “Precision” and “Recall” are two standard pointers in analysing such results. We created a gold standard set of comments consisting of a small balanced set of negative and non-negative comments. Here, ‘Precision’, is the fraction of comments that are actually negative out of the comments which are classified as negative. The closer the precision is to 1, the fewer the number of false negatives compared to the number of true negatives. ‘Recall’ is the fraction of negative comments which are classified correctly out of the total number of negative comments. The closer the recall is to one, the higher the fraction of negative comments which are classified as negative.

Here TN represents true negatives, i.e. comments which are negative and are classified as negative. FN represents false negatives, i.e. comments which are not negative but which are classified as negative. FP represents false positives, i.e. comments which are negative, but are classified as non-negative.

The results are as displayed below

The reason why the precision is so small on the second set is that the vast majority of tickets are non-negative and there will be a certain percentage of these which are wrongly marked as negative by our algorithm. This number is large compared to the number of negative comments which are marked as negative.

We also ran the raw comments through the library we used for Sentiment Analysis and got the following results:

So, we can see that our algorithm involving trigrams and assigning scores according to the above mentioned procedure vastly improve the Sentiment Polarity Prediction process over assigning scores to the raw comments all at once.

Conclusion

We came up with a commendable method to ignore the objective parts of an IT support ticket by coming up with a list of text snippets which did not typically have a sentiment value in the IT support ticket context. The current method of assigning sentiment scores is lexicon based and relies on keywords to which a sentiment score is attached. This might not be able to pick up subtle ways of expressing negative sentiment which a human reader would easily pick up.

Machine learning methods would pick up most of such expressions. But, we, unfortunately, did not have enough data or a balanced set to run supervised learning algorithm

We managed to get a good performance out of a tool which was not explicitly developed to deal with the difficult task of separating out the objective and subjective parts of an IT support ticket and then assign a sentiment score to it.

Jyothish Vidyadharan

Jyothish is an ML Engineer working with Tarams for over 5 years. He is passionate about technology and coding

Babunath Giri T

Babu is an engineer who’s managerial skills have been helping Tarams tackle projects and clients with much success. He has been a part of Tarams for over 3 years.